Deep dive clickstream analytic series: Serverless web console

Overview

This post explores the web console module of the clickstream solution.

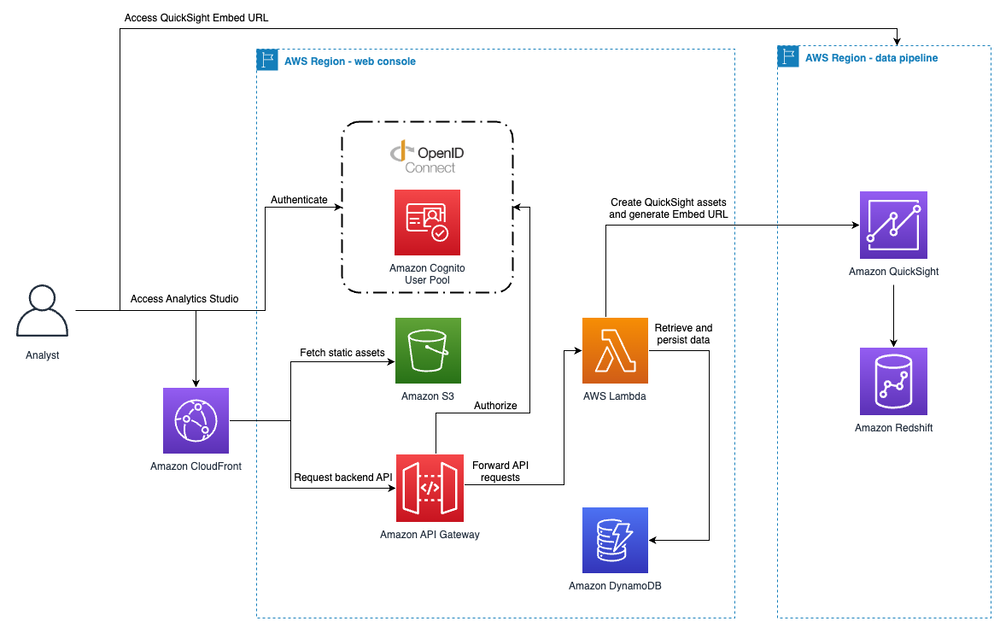

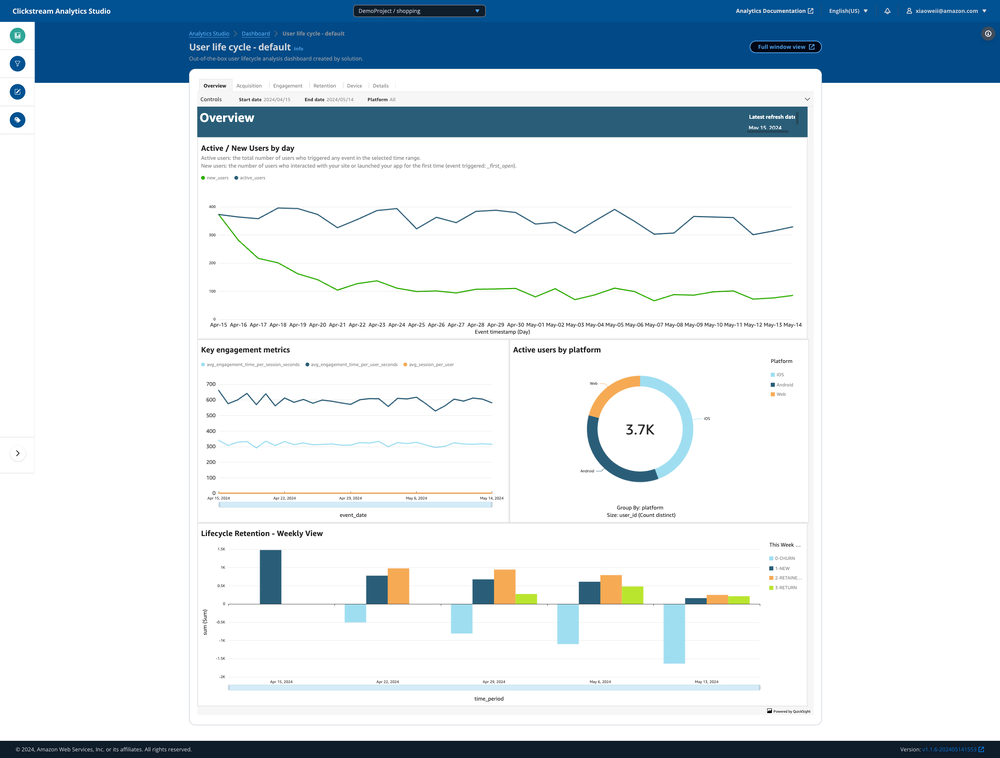

The web console allows users to create and manage projects with their data pipeline, which ingests, processes, analyzes, and visualizes clickstream data. In version 1.1, the Analytics Studio was introduced for business analysts, enabling them to view metrics dashboards, explore clickstream data, design customized dashboards, and manage metadata without requiring in-depth knowledge of data warehouses and SQL.

One code base for different architectures

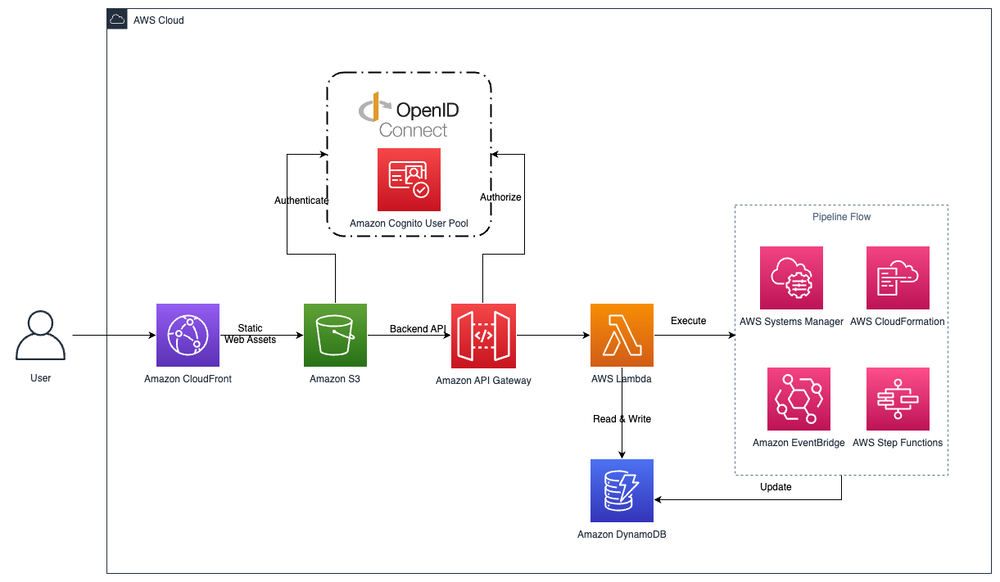

The web console is a web application built using AWS serverless technologies, as demonstrated in the Build serverless web application with AWS Serverless series.

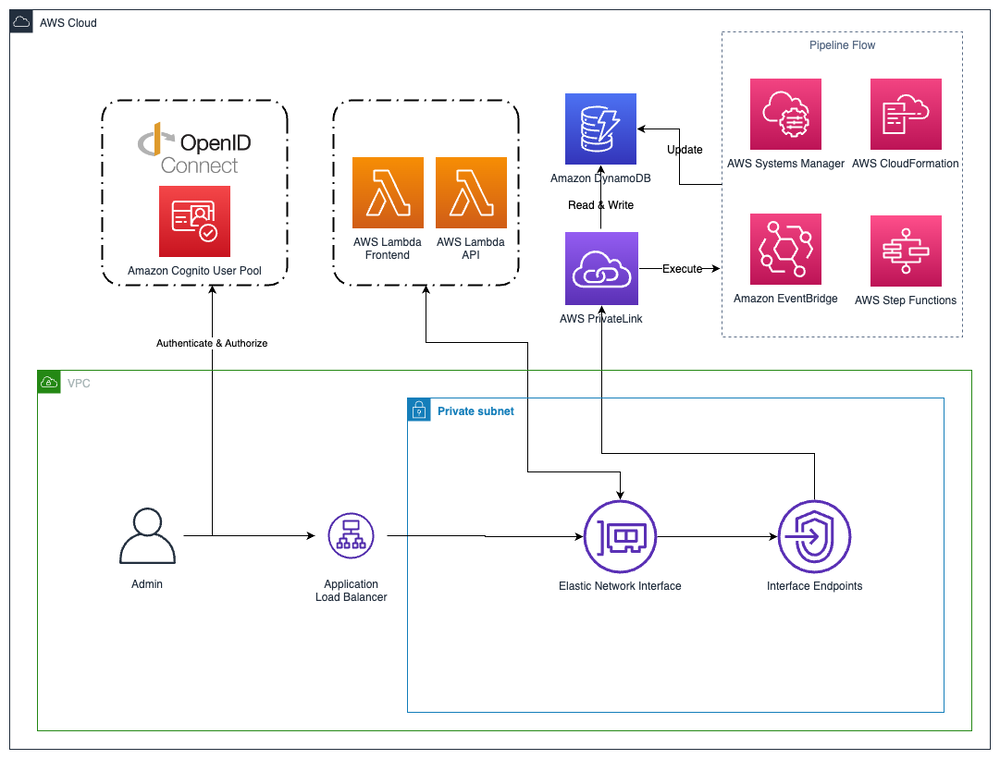

Another use case is deploying the web console within a private network, such as a VPC, to meet compliance requirements. In this architecture, CloudFront, S3, and API Gateway are replaced by an internal Application Load Balancer and Lambda functions running within the VPC.

Both deployment modes share the same code base. The AWS Lambda Web Adapter converts the events sent by either API Gateway or Application Load Balancer into ordinary HTTP requests, so the same web application handles both without any event-specific code.
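To make the idea concrete, here is a minimal sketch of the kind of application that can sit behind the adapter: a plain HTTP server with no Lambda event handling at all. It uses Node's built-in `http` module instead of Express to stay dependency-free. The `/api/health` route and the `route` and `startServer` names are assumptions for illustration, not the solution's actual code.

```typescript
import { createServer, Server } from 'node:http';

export interface RouteResult {
  status: number;
  body: string;
}

// Pure routing logic: it only sees an HTTP method and a URL, never a Lambda event.
// The '/api/health' route is a hypothetical example.
export function route(method: string, url: string): RouteResult {
  if (method === 'GET' && url === '/api/health') {
    return { status: 200, body: JSON.stringify({ ok: true }) };
  }
  return { status: 404, body: JSON.stringify({ message: 'Not found' }) };
}

// Wrap the routing logic in an ordinary HTTP server. The adapter forwards
// requests to this server regardless of which service invoked the function.
export function startServer(port: number): Server {
  return createServer((req, res) => {
    const result = route(req.method ?? 'GET', req.url ?? '/');
    res.writeHead(result.status, { 'Content-Type': 'application/json' });
    res.end(result.body);
  }).listen(port);
}
```

An entry point would call `startServer` with the port the adapter is configured to forward to. Check the adapter's documentation for the default port and the environment variable that overrides it in your version.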

Authentication and Authorization

The web console supports two deployment modes for authentication:

- If the AWS region has Amazon Cognito User Pool available, the solution can automatically create a Cognito user pool as an OIDC provider.

- If the region does not have Cognito User Pool, or the user wants to use existing third-party OIDC providers like Okta or Keycloak, the solution allows specifying the OIDC provider information.

For the API layer, a custom authorizer is used when the API is served through API Gateway, and Express middleware is used when the API is served through Application Load Balancer.

The backend code uses the Express framework to implement the authorization of the API, supporting both deployment modes.
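The middleware approach can be sketched as follows. This is an illustration under stated assumptions rather than the solution's implementation: the request and response types are minimal stand-ins for Express's, `bearerAuth` is a hypothetical name, and token verification is injected as a `verify` function because real OIDC verification (signature checks against the provider's keys) needs a JWT library and is normally asynchronous.

```typescript
// Minimal stand-ins for the Express request/response types (assumptions).
export type Claims = Record<string, unknown>;

export interface HttpRequest {
  headers: Record<string, string | undefined>;
  user?: Claims;
}

export interface HttpResponse {
  status(code: number): HttpResponse;
  json(body: unknown): void;
}

// Hypothetical verifier: returns the token claims, or undefined if the token is invalid.
// A real one would validate the signature, issuer, audience, and expiry.
export type VerifyToken = (token: string) => Claims | undefined;

// Express-style middleware factory: rejects requests without a valid bearer token.
export function bearerAuth(verify: VerifyToken) {
  return (req: HttpRequest, res: HttpResponse, next: () => void): void => {
    const header = req.headers['authorization'] ?? '';
    const token = header.startsWith('Bearer ') ? header.slice(7).trim() : '';
    const claims = token ? verify(token) : undefined;
    if (!claims) {
      res.status(401).json({ message: 'Unauthorized' });
      return;
    }
    req.user = claims; // make the claims available to later handlers
    next();
  };
}
```

Because the check lives in the application rather than in the gateway, the same logic runs no matter which service forwards the request.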

Centralized web console

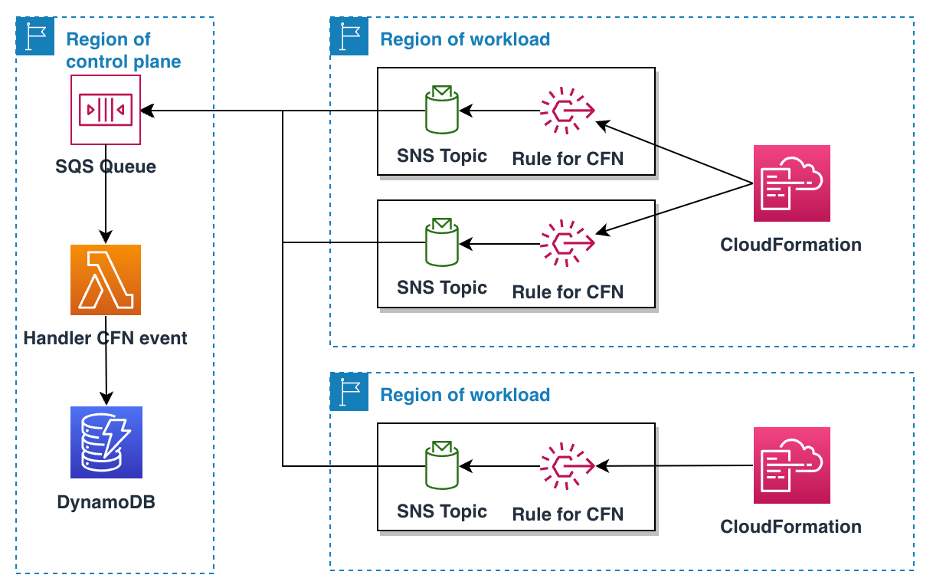

A design tenet of the clickstream solution is to use a single web console, which serves as the control plane, to manage one or more isolated data pipelines across any supported region.

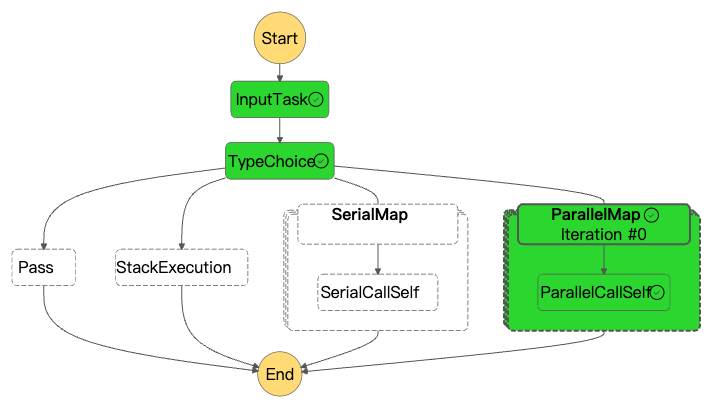

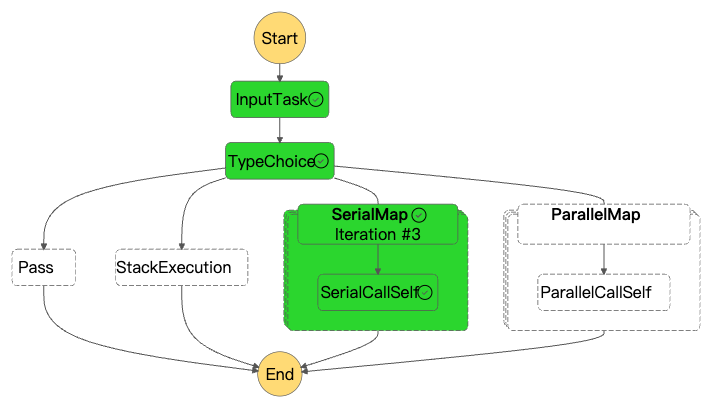

Pipeline lifecycle management

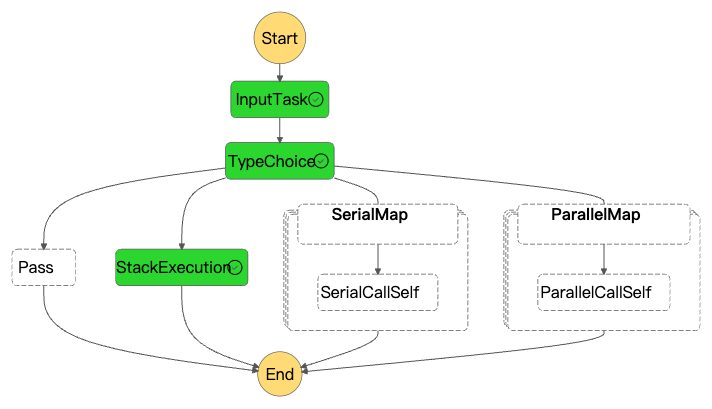

The web console manages the lifecycle of the project's data pipeline, which is composed of modular components managed by multiple AWS CloudFormation stacks. The web console uses AWS Step Functions to orchestrate the lifecycle of those CloudFormation stacks. The workflows are built from three abstractions: parallel execution, serial execution, and stack execution.
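One way to picture the three abstractions is as a small tree that an interpreter walks. The sketch below is a local illustration only: the `Workflow` type, its field names, and the `runWorkflow` function are assumptions, and the real solution runs this logic in Step Functions rather than in-process.

```typescript
// Hypothetical workflow tree: a node is a single stack, a serial list, or parallel branches.
export type Workflow =
  | { Type: 'Stack'; StackName: string }
  | { Type: 'Serial'; Steps: Workflow[] }
  | { Type: 'Parallel'; Branches: Workflow[] };

// Stand-in for "create, update, or delete one CloudFormation stack and wait for it".
export type StackAction = (stackName: string) => Promise<void>;

export async function runWorkflow(node: Workflow, action: StackAction): Promise<void> {
  switch (node.Type) {
    case 'Stack':
      await action(node.StackName);
      return;
    case 'Serial':
      // Each step starts only after the previous one has finished.
      for (const step of node.Steps) {
        await runWorkflow(step, action);
      }
      return;
    case 'Parallel':
      // All branches start together; the node finishes when every branch has finished.
      await Promise.all(node.Branches.map((branch) => runWorkflow(branch, action)));
      return;
  }
}

// Example tree with hypothetical stack names: ingestion first, then two
// independent stacks in parallel, then reporting.
export const examplePipeline: Workflow = {
  Type: 'Serial',
  Steps: [
    { Type: 'Stack', StackName: 'Ingestion' },
    {
      Type: 'Parallel',
      Branches: [
        { Type: 'Stack', StackName: 'DataProcessing' },
        { Type: 'Stack', StackName: 'DataModeling' },
      ],
    },
    { Type: 'Stack', StackName: 'Reporting' },
  ],
};
```

Nesting the three node types is enough to express dependencies between stacks without writing a separate workflow for every pipeline shape.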

The status change events of the CloudFormation stacks are sent to an SNS topic via EventBridge. The messages are then delivered to an SQS queue in the same region or in another region, so that the centralized web console can track pipelines wherever they run.
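On the consuming side, each SQS message has to be unwrapped before the stack status can be read. The following sketch assumes the default SNS-to-SQS delivery (a JSON envelope whose `Message` field holds the EventBridge event as a string) and the `CloudFormation Stack Status Change` event format as documented by AWS. The `parseStackStatus` function and the `StackStatusChange` type are hypothetical names, and the field names should be verified against real messages from your queue.

```typescript
export interface StackStatusChange {
  stackId: string;
  status: string;
  region: string;
}

// Extracts the stack status from an SQS message body, or returns undefined
// if the message is not a CloudFormation stack status change event.
export function parseStackStatus(sqsBody: string): StackStatusChange | undefined {
  const envelope = JSON.parse(sqsBody);
  // With raw message delivery enabled there is no SNS envelope, so fall back to the body itself.
  const event = typeof envelope.Message === 'string' ? JSON.parse(envelope.Message) : envelope;

  if (
    event.source !== 'aws.cloudformation' ||
    event['detail-type'] !== 'CloudFormation Stack Status Change'
  ) {
    return undefined;
  }

  const stackId = event.detail?.['stack-id'];
  const status = event.detail?.['status-details']?.status;
  if (typeof stackId !== 'string' || typeof status !== 'string') {
    return undefined;
  }
  return { stackId, status, region: String(event.region ?? '') };
}
```

Returning `undefined` for anything unexpected lets the consumer skip unrelated messages instead of failing the whole batch.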

Service availability checks

The web console provides a wizard that allows users to provision a data pipeline on the cloud based on their requirements, such as pipeline region, VPC, sink type, and data processing interval. Since AWS services have varying regional availability and features, the web console needs to dynamically display the available options based on the service availability, which it checks through the CloudFormation registry, as the pipeline components are managed by CloudFormation.

Below is a sample code snippet for checking the availability of key services used in the solution.

```typescript
import { CloudFormationClient, DescribeTypeCommand } from '@aws-sdk/client-cloudformation';

// `aws_sdk_client_common_config` and `logger` are defined elsewhere in the solution code.

// Look up a resource type in the CloudFormation registry of the given region.
// Returns undefined if the type is not registered there or the call fails.
export const describeType = async (region: string, typeName: string) => {
  try {
    const cloudFormationClient = new CloudFormationClient({
      ...aws_sdk_client_common_config,
      region,
    });
    const params: DescribeTypeCommand = new DescribeTypeCommand({
      Type: 'RESOURCE',
      TypeName: typeName,
    });
    return await cloudFormationClient.send(params);
  } catch (error) {
    logger.warn('Describe AWS Resource Types Error', { error });
    return undefined;
  }
};

// Map a service to a representative CloudFormation resource type and
// report whether that type is available in the region.
export const pingServiceResource = async (region: string, service: string) => {
  let resourceName = '';
  switch (service) {
    case 'emr-serverless':
      resourceName = 'AWS::EMRServerless::Application';
      break;
    case 'msk':
      resourceName = 'AWS::KafkaConnect::Connector';
      break;
    case 'redshift-serverless':
      resourceName = 'AWS::RedshiftServerless::Workgroup';
      break;
    case 'quicksight':
      resourceName = 'AWS::QuickSight::Dashboard';
      break;
    case 'athena':
      resourceName = 'AWS::Athena::WorkGroup';
      break;
    case 'global-accelerator':
      resourceName = 'AWS::GlobalAccelerator::Accelerator';
      break;
    case 'flink':
      resourceName = 'AWS::KinesisAnalyticsV2::Application';
      break;
    default:
      break;
  }
  // Unknown services are treated as unavailable.
  if (!resourceName) return false;
  const resource = await describeType(region, resourceName);
  return !!resource?.Arn;
};
```
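The wizard needs the answer for several services at once. A small helper along the following lines could collect the results into one map. It is a sketch: `checkServices` is a hypothetical name, and the check itself is passed in as a `ping` function with the same signature as `pingServiceResource`, so the helper stays independent of the AWS SDK.

```typescript
// Same shape as pingServiceResource: resolves to true when the service is available.
export type Ping = (region: string, service: string) => Promise<boolean>;

// Checks all services concurrently and returns a service -> availability map.
export async function checkServices(
  region: string,
  services: string[],
  ping: Ping,
): Promise<Record<string, boolean>> {
  const results = await Promise.all(
    services.map(async (service) => {
      // Treat a failed check as "unavailable" so one error does not hide the other results.
      const available = await ping(region, service).catch(() => false);
      return { service, available };
    }),
  );
  const availability: Record<string, boolean> = {};
  for (const { service, available } of results) {
    availability[service] = available;
  }
  return availability;
}
```

A caller could then pass the result straight to the UI, for example `checkServices(region, ['msk', 'quicksight'], pingServiceResource)`, and disable the options whose value is `false`.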

Optimize package size

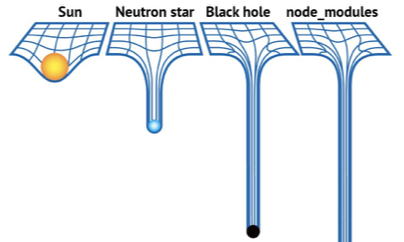

One crucial best practice when working with serverless functions like AWS Lambda is optimizing the package size. Just like in the analogy shown here, a smaller and more compact package results in faster performance, lower costs, and more efficient resource utilization. However, when we reach the node_modules folder, it's akin to a vast, sprawling structure, representing an unoptimized and bloated package size. This can lead to slower cold starts, higher compute costs, and potential issues with deployment limits. By following best practices such as minimizing dependencies, leveraging code bundling and tree-shaking techniques, and optimizing asset handling, you can achieve an optimized package size, ensuring your serverless functions are lean, efficient, and cost-effective.

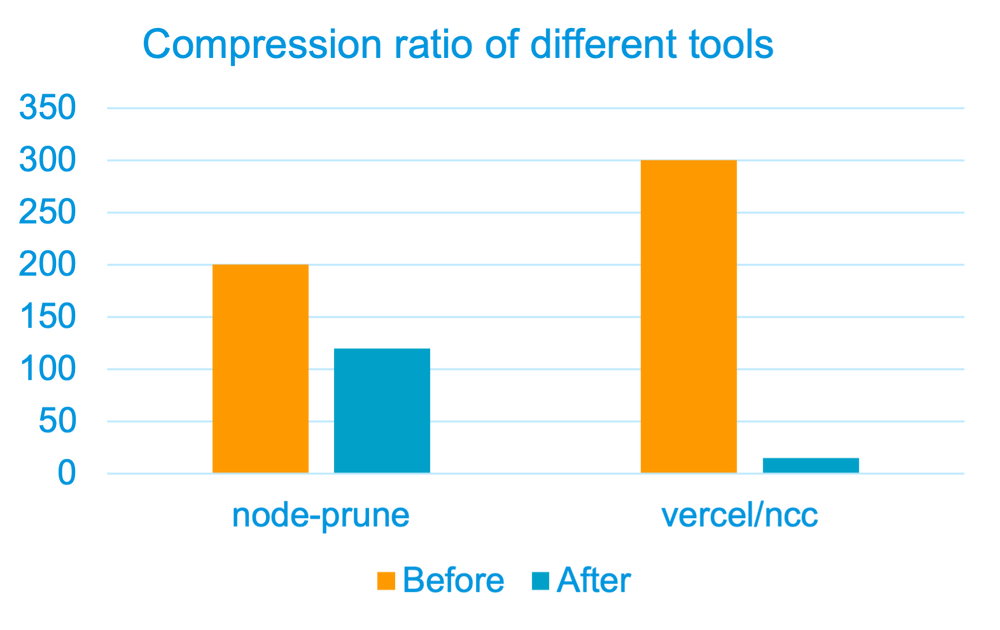

Originally, the solution used node_prune to remove unnecessary files from node_modules and reduce the Lambda package size. This brought the package down from 200 MB to 120 MB.

After new dependencies were introduced, the package grew to 300 MB before pruning, and node_prune could no longer bring it under Lambda's hard limit of 250 MB for an unzipped deployment package.

The team then adopted vercel/ncc, an open source tool that bundles and tree-shakes node_modules. It compiles the solution code and its dependencies into a single file, shrinking the package from 300 MB to only 20 MB. That is a 15x reduction!

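One more thing to keep in mind: the bundled code runs from a different location than the original source files, so any configuration files that your application loads at runtime must keep the same path relative to the bundle.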
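A post-build step can take care of this by copying the runtime files next to the bundle under the same relative paths. The sketch below is one possible approach, not the solution's build script: `copyRuntimeFiles` and the `config/app.json` path in the usage note are hypothetical.

```typescript
import * as fs from 'node:fs';
import * as path from 'node:path';

// Copies each file from srcRoot into outDir, preserving its relative path,
// and returns the list of paths that were written.
export function copyRuntimeFiles(
  srcRoot: string,
  outDir: string,
  relativeFiles: string[],
): string[] {
  return relativeFiles.map((relativeFile) => {
    const target = path.join(outDir, relativeFile);
    // Create intermediate folders so nested paths such as config/app.json work.
    fs.mkdirSync(path.dirname(target), { recursive: true });
    fs.copyFileSync(path.join(srcRoot, relativeFile), target);
    return target;
  });
}
```

For example, `copyRuntimeFiles('src', 'dist', ['config/app.json'])` would place the file at `dist/config/app.json`, so code that reads `path.join(__dirname, 'config/app.json')` can still find it when running from the bundled output in `dist`.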

Embedded QuickSight dashboards

Analytics Studio is a component of the clickstream web console that integrates Amazon QuickSight dashboards into our web application.

In the Exploration module of Analytics Studio, the web console automatically creates temporary visuals and dashboards for each query. These temporary resources are owned by the QuickSight role or user that the web console uses, so they remain invisible to other QuickSight users.
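Embedding a dashboard for a known QuickSight user typically starts with a request for an embed URL. The sketch below only builds the request input, assuming the console uses QuickSight's registered-user embedding (`GenerateEmbedUrlForRegisteredUser`); the source does not say which embedding API the solution calls. `buildEmbedUrlInput` and `EmbedUrlInput` are hypothetical names, and the field names follow the public API reference at the time of writing.

```typescript
// Subset of the GenerateEmbedUrlForRegisteredUser request used for dashboard embedding.
export interface EmbedUrlInput {
  AwsAccountId: string;
  UserArn: string;
  SessionLifetimeInMinutes: number;
  AllowedDomains: string[];
  ExperienceConfiguration: { Dashboard: { InitialDashboardId: string } };
}

export function buildEmbedUrlInput(
  accountId: string,
  userArn: string,
  dashboardId: string,
  consoleOrigin: string,
  sessionMinutes = 60,
): EmbedUrlInput {
  // The API documents a session lifetime between 15 and 600 minutes.
  if (sessionMinutes < 15 || sessionMinutes > 600) {
    throw new RangeError('sessionMinutes must be between 15 and 600');
  }
  return {
    AwsAccountId: accountId,
    // The QuickSight user that owns the temporary dashboards.
    UserArn: userArn,
    SessionLifetimeInMinutes: sessionMinutes,
    // Only pages served from this origin may host the embedded dashboard.
    AllowedDomains: [consoleOrigin],
    ExperienceConfiguration: { Dashboard: { InitialDashboardId: dashboardId } },
  };
}
```

The returned object could be passed to `GenerateEmbedUrlForRegisteredUserCommand` from `@aws-sdk/client-quicksight`, and the resulting URL loaded in an iframe or through the QuickSight embedding SDK.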